What can we learn from past anxiety over automation?

– Daniel Akst

The automation crisis of the 1960s created a surge of alarm over technology’s job-killing effects. There is a lot we can learn from it.

In Ulysses (1922), it’s been said, James Joyce packed all of life into a single Dublin day. So it shouldn’t be surprising that he found room in the novel for Leopold Bloom to tackle the problem of technological disruption:

A pointsman’s back straightened itself upright suddenly against a tramway standard by Mr. Bloom’s window. Couldn’t they invent something automatic so that the wheel itself much handier? Well but that fellow would lose his job then? Well but then another fellow would get a job making the new invention?

Notice Bloom’s insights: first, that technology could obviate arduous manual labor; second, that this would cost somebody a job; and third, that it would also create a job, but for a different person altogether.

Surprisingly few people have grasped this process as well as Joyce did. Aristotle pointed out that if the looms wove and the lyres played themselves, we’d need fewer people to do these things. The Luddites, active in 19th-century England, didn’t take the mechanization of textile making lying down. And in 1930, no less an economic sage than John Maynard Keynes fretted about temporary “technological unemployment,” which he feared would grow faster than the number of jobs created by new technologies.

More than a century has passed since that now-celebrated day in 1904 when Joyce’s creation crisscrossed Dublin, and for most of that time technology and jobs have galloped ahead together. Just as Bloom observed, technological advances have not reduced overall employment, though they have certainly cost many people their jobs. But now, with the advent of machines that are infinitely more intelligent and powerful than most people could have imagined a century ago, has the day finally come when technology will leave millions of us permanently displaced?

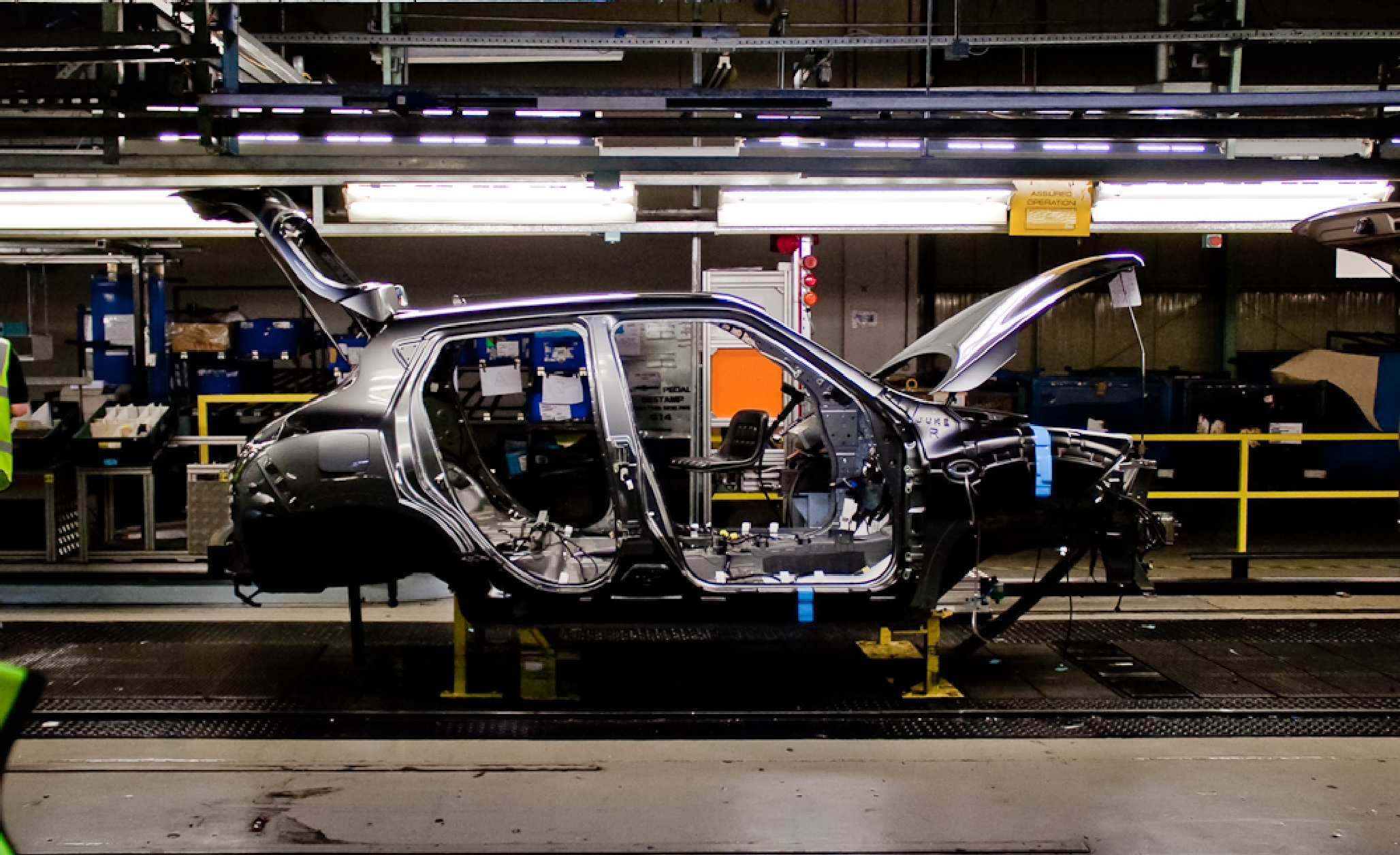

Judging by the popular press, the answer is yes, and plenty of alarming data has led some people to that view. Between January 1990 and January 2010, the United States shed 6.3 million manufacturing jobs, a staggering decrease of 36 percent. Since then, it has regained only about 500,000. Four years after the official end of the Great Recession, unemployment is still running at a recession-like rate of around 7.5 percent, and millions of Americans have given up even looking for work.

Economists, struggling to disentangle the effects of technology, trade, and other forces, don’t have a definitive answer to the question of whether this time is different. David Autor, an MIT economist who is one of the leading researchers in the field, argues that trade (imports from China and elsewhere) has increased unemployment. Technology, meanwhile, has reshaped the job market into something like an hourglass, with more jobs at the top (in fields such as finance) and at the bottom (in fields such as food service) and fewer in between.

History can shed some light on our concerns. It was in the middle of the last century that the United States last seemed to encounter job-destroying technologies on today’s scale. (The economic woes of the 1970s and ’80s were mostly blamed — at least in the popular mind — on Japanese imports.) Automation was a hot topic in the media and among social scientists, pundits, and policymakers. It was a time of unsettlingly rapid technological change, much like our own. Productivity was increasing rapidly, and technical discoveries — think of television and transistors — were being commercialized at an ever more rapid rate. Before World War I, it had taken an average of 30 years for a technological innovation to yield a commercial product. During the early 1960s, it was taking only nine. Yet unemployment in the Kennedy and early Johnson years remained stubbornly high, reaching seven percent at one point. Automation, seen loitering in the vicinity of the industrial crime, appeared a likely culprit.

Life magazine held up an example in 1963, showing a picture of a device called the Milwaukee-Matic, an innovative industrial machining tool, surrounded by the 18 workers it could replace. “There are 180 Milwaukee-Matics in operation in the U.S., and a union official in a plant in which it was installed reported: ‘There is now no need for 40 percent of our toolmakers, 50 percent of our machine operators. Without a shorter work week, 60 percent of our members will be out of a job.’”

A year after the Milwaukee-Matic’s star turn, Lyndon B. Johnson took time from his many troubles — Vietnam, urban unrest — to create the blue-ribbon National Commission on Technology, Automation, and Economic Progress. The New York Times took the enterprise seriously enough to name all the commission members in its pages. The Public Interest, which would become one of the most influential intellectual journals of the postwar era, took up the automation crisis in its debut issue the next year. In one of the essays, the prominent economist Robert Heilbroner argued that rapid technological change had supercharged productivity in agriculture and manufacturing, and now threatened “a whole new group of skills — the sorting, filing, checking, calculating, remembering, comparing, okaying skills — that are the special preserve of the office worker.”

Ultimately, Heilbroner warned, “as machines continue to invade society, duplicating greater and greater numbers of social tasks, it is human labor itself — at least, as we now think of ‘labor’ — that is gradually rendered redundant.” Heilbroner was not the biggest pessimist of the day. Economist Ben B. Seligman’s dark view of the whole business is captured by the title of his book Most Notorious Victory: Man in an Age of Automation (1966) and the volume’s ominous chapter headings, including “A Babel of Calculators,” “Work Without Men,” and “The Trauma We Await.”

Reading through the literature of the period, one is struck — and humbled — by how wrong so many smart people could be. Yet some got the story largely right. Automation did not upend the fundamental logic of the economy. But it did disproportionate harm to less-skilled workers. And some of its most important effects were felt not in the economic realm but in the arena of social change.

Many of those who wrote about the automation crisis saw technological change in a very different light than we do today. With the tailwind of the enormous achievements involved in winning World War II and two subsequent decades of relatively constant prosperity behind them, they were full of confidence in their ability to manage the future. They tended to view the challenge of automation as a problem of abundance: machines were finally yielding the long-promised benefits that would allow human beings to slough off lives of endless and usually unrewarding labor without sacrificing the good things in life. As Life noted, even as manufacturers were reducing payrolls, factory output was growing at a brisk pace. Yes, factory workers and others were hurt in the process, but the midcentury seers mostly looked upon that as a problem to be managed as the nation traveled toward the bright light ahead.

There is a good deal to be said for recalling that point of view at a time when we see so many things through a glass darkly. But doing so also has its hazards. For instance, it led the savants of automation to err in some of their thinking about the future of jobs. To begin with, they misunderstood the nature of abundance itself. Although the principle that human wants are insatiable is enshrined in every introductory economics course, it was somehow forgotten by intellectuals who themselves probably weren’t very materialistic, and who might only have been dimly aware of the great slouching beasts of retailing — the new shopping malls — going up on the edge of town. Heilbroner, writing in The New York Review of Books, worried that even if “we can employ most of the population as psychiatrists, artists, or whatever ... there is still an upper limit on employment due, very simply, to the prospect of a ceiling on the total demand that can be generated for marketable goods and services.”

Sociologist David Riesman, one of the big thinkers who roamed the cultural landscape in those days (he was the lead author of the surprise 1950 bestseller The Lonely Crowd), innocently suggested that the bourgeoisie had already lost “the zest for possessions,” surely one of the worst predictions ever made.

Related to this misunderstanding about consumerism was the idea that the time was nigh when people would hardly have to work at all. Harried families in today’s suburbs will be astonished to learn that some critics even worried about what we would do with all that leisure time.

These ideas weren’t as far-fetched as they sound. In the first half of the 20th century, the number of hours worked per week had shrunk by a quarter for the average worker, and in 1967 the futurist Herman Kahn declared that this trend would continue, predicting a four-day work week and 13 weeks of vacation.

There was a serious debate among many of the era’s leading thinkers about whether all this leisure would be a good thing. Herbert Marcuse, the philosopher who served as an intellectual godfather to the New Left, was optimistic. He saw automation and the attendant increase in leisure as “the first prerequisite for freedom” from the deadening cycle of getting and spending which cost the individual “his time, his consciousness, his dreams.” But Riesman and the influential psychologist Erich Fromm were among those who worried that people would be unfulfilled without work, or that work itself would be unfulfilling in an automated society, with equally unfulfilling leisure the result. As late as 1974, when the U.S. Interior Department drafted the Nationwide Outdoor Recreation Plan, people still thought they could see the leisure society just around the bend. And it was a good thing they could see it coming, too. As the Interior Department intoned, “Leisure, thought by many to be the epitome of paradise, may well become the most perplexing problem of the future.”

Advocates on both sides of the automation debate thus fell into the classic extrapolation trap, assuming that the trends they saw in front of them would continue indefinitely. But as the old saying goes, even a train stops. You don’t hear too many of those lucky enough to hold a job today complaining about having too much leisure on their hands.

The same unwarranted extrapolation was at work in thinking about household incomes. Many thoughtful people of the day, with no inkling of what we’d someday lay out for health care, higher education, and pets, just couldn’t imagine that Americans would find a way to spend all the money the technology revolution would enable them to make.

In his review of a prescient work called The Shape of Automation (1966), by Herbert Simon, a manifold genius who would go on to win the Nobel Prize in Economics, Heilbroner scoffed at Simon’s notion that the average family income would reach $28,000 (in 1966 dollars) after the turn of the century: “He has no doubt that these families will have plenty of use for their entire income. ... But why stop there? On his assumptions of a three percent annual growth rate, average family incomes will be $56,000 by the year 2025; $112,000 by 2045; and $224,000 a century from today. Is it beyond human nature to think that at this point (or a great deal sooner), a ceiling will have been imposed on demand — if not by edict, then tacitly? To my mind, it is hard not to picture such a ceiling unless the economy is to become a collective vomitorium.”

Simon responded drily that he had “great respect for the ability of human beings — given a little advance warning — to think up reasonable ways” of spending that kind of money, and to do so “without vomiting.” He was right about that, of course, even though he was wrong about the particular numbers: his estimate of the average family income in 2006 translates into more than $200,000 in current dollars. Nobody at the time foresaw the coming stagnation of middle-class incomes.

Some midcentury commentators on automation did hit close to the mark on major questions. For example, in another blunt response to Heilbroner’s criticism, Simon wrote, “The world’s problems in this generation and the next are problems of scarcity, not of intolerable abundance. The bogeyman of automation consumes worrying capacity that should be saved for real problems — like population, poverty, the Bomb, and our own neuroses.”

In 1966, the Commission on Technology, Automation, and Economic Progress issued a sensible report rejecting the argument that technology was to blame for a great deal of unemployment, although, with the wisdom of Leopold Bloom, it recognized technological change as “a major factor in the displacement and temporary unemployment of particular workers.”

And who were those workers? The answer will be all too familiar: “Unemployment has been concentrated among those with little education or skill, while employment has been rising most rapidly in those occupations generally considered to be the most skilled and to require the most education. This conjunction raises the question whether technological progress may induce a demand for very skilled and highly educated people in numbers our society cannot yet provide, while at the same time leaving stranded many of the unskilled and poorly educated with no future opportunities for employment.”

Nobel Prize–winning physicist George P. Thomson took up the issue with an odd mix of callousness and concern in The Foreseeable Future (1955): “What is to happen to the really definitely stupid man,” he wondered, “or even the man of barely average intelligence?” Although Thomson didn’t count on rising IQs (a worldwide phenomenon known as the Flynn effect), he did seem to foresee the growing need for home care. “There are plenty of jobs — tending the aged is one — where kindness and patience are worth more than brains. A rich state could well subsidize such work.”

Such worries on behalf of blue-collar workers were far from misplaced. Since midcentury, working-class men in particular have been hammered by a changing economy. The economists Michael Greenstone and Adam Looney found that from 1969 to 2009, the median earnings of men ages 25 to 64 dropped by 28 percent after inflation. For those without a high school diploma, the drop was 66 percent. This is to say nothing of lost pensions and health insurance.

Why such big declines? The Great Recession was particularly unkind to men, costing twice as many men as women their jobs. But the job losses date back further, and are attributable to some combination of trade and technology. The flood of women and immigrants entering the work force and competing for jobs also played a role. The big income losses reflect the fact that, when manufacturing jobs vanished, the men who had held them often fell out of the work force for good. In fact, the proportion of men who were not in the formal labor force tripled from 1960 to 2009, to a remarkable 18 percent. (Some of that change, admittedly, was the result of a rise in the number of early retirements and other benign factors.)

LBJ’s commission on automation owed at least some of its insight to the presence among its members of the remarkable sociologist Daniel Bell, another of the era’s big thinkers (who would give us a particularly far-sighted work, The Coming of Post-Industrial Society, in 1973). Bell wrote about the automation debate with characteristic perception, recognizing that its impact would be more subtle than the alarmists feared, yet perhaps just as far-reaching.

“Americans, with their tendency to exaggerate new innovations, have conjured up wild fears about changes that automation may bring,” he wrote in Work and Its Discontents: The Cult of Efficiency in America (1956). Citing predictions of “a dismal world of unattended factories turning out mountains of goods which a jobless population will be unable to buy,” he declared flatly, “Such projections are silly.”

Bell acknowledged that there would be disruptions. And he was accurate about their nature, writing that “many workers, particularly older ones, may find it difficult ever again to find suitable jobs. It is also likely that small geographical pockets of the United States may find themselves becoming ‘depressed areas’ as old industries fade or are moved away.” Okay, maybe not “small,” but he was on the right track, and this before the term “Rust Belt” was in common use.

Bell also saw something that all too often eludes futurists, which is that technology would “have enormous social effects.” It would, he said, change the composition of the labor force, “creating a new salariat instead of a proletariat, as automated processes reduce[d] the number of industrial workers required.” He accurately foresaw a world in which “muscular fatigue [would be] replaced by mental tension, the interminable watching, the endless concentration” of modern work, even though the watching now involves a smartphone or computer screen more often than a set of dials on some piece of industrial equipment. Bell also foresaw a different way of judging a worker’s worth, suggesting that “there may arise a new work morality” in which the value of employees would derive from their success at “planning and organizing and the continuously smooth functioning of the operations. Here the team, not the individual worker, will assume a new importance.”

It took a woman, however, to recognize that the diminishing role of brawn had put us on the path toward a world in which gender roles would converge. In a collection of essays Bell edited called Toward the Year 2000: Work in Progress (1967), anthropologist Margaret Mead wrote that traditional gender roles would break down in developed nations, that a cultural and religious backlash might develop, and that men might feel threatened as the traditional markers of masculinity became degendered. (Mead wasn’t right about everything; she also warned of an increase in “overt hostile homosexuality” as one sign of “weakening in the sense of sure sex identity in men.”)

Instead of automating repetitive tasks, technology today is climbing the cognitive ladder, using artificial intelligence and brute processing power to automate (however imperfectly) the functions of travel agents, secretaries, tax preparers, even teachers — while threatening the jobs of some lawyers, university professors, and other professionals who once thought their sheepskins were a bulwark against this sort of thing. Maybe this time, things really are different. In The McKinsey Quarterly in 2011, for example, the economist and latter-day big thinker W. Brian Arthur, a former Stanford professor, talked about a “second economy” of digitized business processes running “vast, silent, connected, unseen, and autonomous” alongside the physical economy: “The second economy will certainly be the engine of growth and the provider of prosperity for the rest of this century and beyond, but it may not provide jobs, so there may be prosperity without full access for many. This suggests to me that the main challenge of the economy is shifting from producing prosperity to distributing prosperity.”

Arthur’s argument recalls the views of a group of midcentury seers, the grandly named Ad Hoc Committee on the Triple Revolution, whose members included Heilbroner, scientist Linus Pauling, and social scientist Gunnar Myrdal. “The traditional link between jobs and incomes is being broken,” the committee wrote in its manifesto. “The economy of abundance can sustain all citizens in comfort and economic security whether or not they engage in what is commonly reckoned as work,” the committee continued, arguing for “an unqualified commitment to provide every individual and every family with an adequate income as a matter of right.”

Echoing the Triple Revolution manifesto, Arthur argued that “the second economy will produce wealth no matter what we do,” and that the challenge had become “distributing that wealth.” For centuries, he noted, “wealth has traditionally been apportioned in the West through jobs, and jobs have always been forthcoming. When farm jobs disappeared, we still had manufacturing jobs, and when these disappeared we migrated to service jobs. With this digital transformation, this last repository of jobs is shrinking — fewer of us in the future may have white-collar business process jobs — and we face a problem.”

Perhaps the biggest lesson we can learn from the midcentury thinkers who worried about automation is that while there is cause for concern, there is no other way but forward. Like trade, automation makes us better off collectively by making some of us worse off. So the focus of our concern should be on those injured by the robots, even if the wounds are “only” economic.

The issue, in other words, isn’t technological but distributional — which is to say political. Automation presents some of us with a kind of windfall. It would be not just churlish but shortsighted if we didn’t share this windfall with those who haven’t been so lucky. This doesn’t mean we must embrace the utopianism of the Triple Revolution manifesto or return to the despised system of open-ended welfare abolished during the Clinton years. But inevitably, if only to maintain social peace, it will mean a movement toward some of the universal programs — medical coverage, long-term care insurance, low-cost access to higher education — that have helped other advanced countries shelter their work forces from economic shocks better than the United States has, and control costs while they’re at it.

The robots will surely keep coming, and keep doing more and more of the work we long have done. But one thing they won’t be able to do — at least not anytime soon — is tell us what we owe each other. Surely we can figure that out for ourselves.

—

Daniel Akst writes the weekly R&D column in The Wall Street Journal.

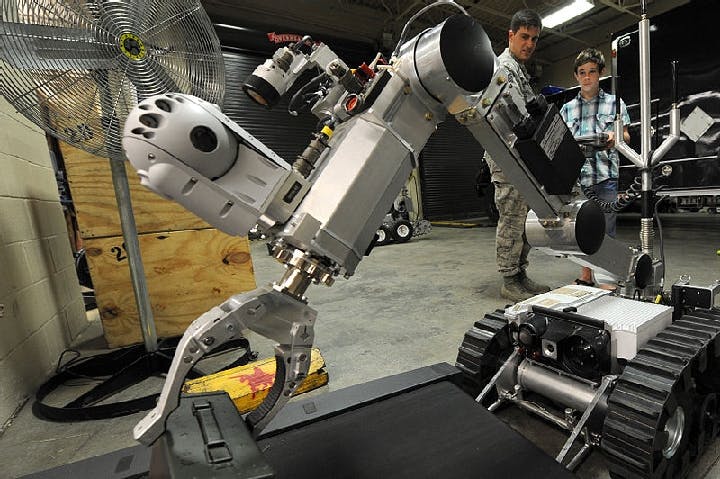

Cover photo courtesy of Wikimedia Commons