Winter 2014

A less-than-splendid little war

– Andrew J. Bacevich

How you should actually think about the Persian Gulf War.

“Nearly a decade after its conclusion,” observes Frank Rich of The New York Times, “the Persian Gulf War is already looking like a footnote to American history.” Rich’s appraisal of Operation Desert Storm and the events surrounding it manages to be, at once, accurate and massively wrong.

Rich is correct in the sense that, 10 years on, the war no longer appears as it did in 1990 and 1991: a colossal feat of arms, a courageous and adeptly executed stroke of statesmanship, and a decisive response to aggression that laid the basis for a new international order. The “official” view of the war, energetically promoted by senior U.S. government figures and military officers and, at least for a time, echoed and amplified by an exultant national media, has become obsolete.

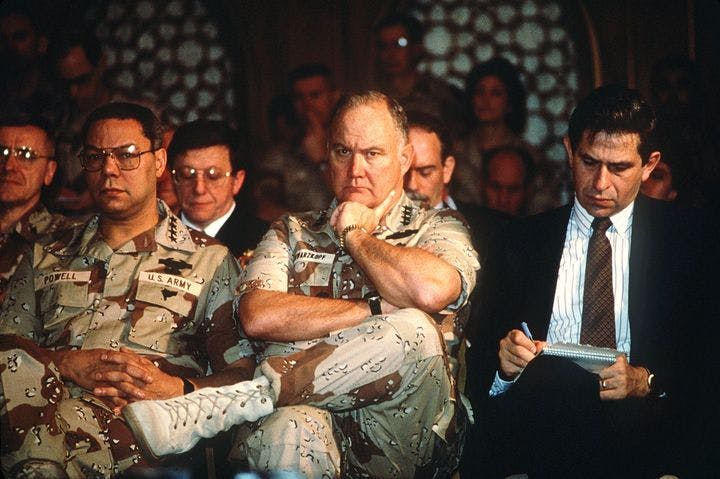

In outline, that official version was simplicity itself: unprovoked and dastardly aggression; a small, peace-loving nation snuffed out of existence; a line drawn in the sand; a swift and certain response by the United States that mobilizes the international community to put things right. The outcome, too, was unambiguous. Speaking from the Oval Office on February 28, 1991, to announce the suspension of combat operations, President George H.W. Bush left no room for doubt that the United States had achieved precisely the outcome it had sought: “Kuwait is liberated. Iraq’s army is defeated. Our military objectives are met.” Characterizing his confrontation with Saddam Hussein’s army, General Norman Schwarzkopf used more colorful language to make the same point: “We’d kicked this guy’s butt, leaving no doubt in anybody’s mind that we’d won decisively.”

In the war’s immediate aftermath, America’s desert victory seemed not only decisive but without precedent in the annals of military history. So stunning an achievement fueled expectations that Desert Storm would pay dividends extending far beyond the military sphere. Those expectations — even more than the action on the battlefield — persuaded Americans that the war marked a turning point. In a stunning riposte to critics who had argued throughout the 1980s that the United States had slipped into a period of irreversible decline, the Persian Gulf War announced emphatically that America was back on top.

In a single stroke, then, the war appeared to heal wounds that had festered for a generation. Reflecting the views of many professional officers, General Colin Powell, chairman of the Joint Chiefs of Staff, expressed his belief that the demons of the Vietnam War had at long last been exorcised. Thanks to Operation Desert Storm, he wrote, “the American people fell in love again with their armed forces.” Indeed, references to “the troops” — a phrase to which politicians, pundits, and network anchors all took a sudden liking — conveyed a not-so-subtle shift in attitude toward soldiers and suggested a level of empathy, respect, and affection that had been absent, and even unimaginable, since the late 1960s.

Bush himself famously proclaimed that, with its victory in the Persian Gulf, the United States had at long last kicked the so-called Vietnam syndrome. That did not mean the president welcomed the prospect of more such military adventures. If anything, the reverse was true: Its military power unshackled, the United States would henceforth find itself employing force less frequently. “I think because of what has happened, we won’t have to use U.S. forces around the world,” Bush predicted during his first postwar press conference. “I think when we say something that is objectively correct, ... people are going to listen.”

To the president and his advisers, the vivid demonstration of U.S. military prowess in the Gulf had put paid to lingering doubts about American credibility. Its newly minted credibility endowed the United States with a unique opportunity: not only to prevent the recurrence of aggression but to lay the foundation for what Bush called a new world order. American power would shape that order, and American power would guarantee the United States a preeminent place in it. America would “reach out to the rest of the world,” Bush and his national security adviser Brent Scowcroft wrote, but, in doing so, America would “keep the strings of control tightly in [U.S.] hands.”

That view accorded precisely with the Pentagon’s own preferences. Cherishing their newly restored prestige, American military leaders were by no means eager to put it at risk. They touted the Gulf War not simply as a singular victory but as a paradigmatic event, a conflict that revealed the future of war and outlined the proper role of U.S. military power. Powell and his fellow generals rushed to codify the war’s key “lessons.” Clearly stated objectives related to vital national interests, the employment of overwhelming force and superior technology, commanders insulated from political meddling, a predesignated “exit strategy” — the convergence of all these factors had produced a brief, decisive campaign, fought according to the norms of conventional warfare and concluded at modest cost and without moral complications. If the generals got their way, standing ready to conduct future Desert Storms would henceforth define the U.S. military’s central purpose.

Finally, the war seemed to have large implications for domestic politics, although whether those implications were cause for celebration or despondency depended on one’s partisan affiliation. In the war’s immediate aftermath, Bush’s approval ratings rocketed above 90 percent. Most experts believed that the president’s adept handling of the Persian Gulf crisis all but guaranteed his election to a second term.

Subsequent events have not dealt kindly with those initial postwar expectations. Indeed, the 1992 presidential election — in which Americans handed the architect of victory in the Gulf his walking papers — hinted that the war’s actual legacy would be different than originally advertised, and the fruits of victory other than expected. Bill Clinton’s elevation to the office of commander in chief was only one among several surprises.

For starters, America’s love affair with the troops turned out to be more an infatuation than a lasting commitment. A series of scandals — beginning just months after Desert Storm with the U.S. Navy’s infamous Tailhook convention in 1991 — thrust the military into the center of the ongoing Kulturkampf. Instead of basking contentedly in the glow of victory, military institutions found themselves pilloried for being out of step with enlightened attitudes on such matters as gender and sexual orientation. In early 1993, the generals embroiled themselves in a nasty public confrontation with their new commander in chief over the question of whether gays should serve openly in the military. The top brass prevailed. But “don’t ask, don’t tell” would prove to be a Pyrrhic victory.

The real story of military policy in the 1990s was the transformation of the armed services from bastions of masculinity (an increasingly suspect quality) into institutions that were accommodating to women and “family friendly.” The result was a major advance in the crusade for absolute gender equality, secured by watering down, or simply discarding, traditional notions of military culture and unit cohesion. By decade’s end, Americans took it as a matter of course that female fighter pilots were flying strike missions over Iraq, and that a terrorist attack on an American warship left female sailors among the dead and wounded.

As the military became increasingly feminized, young American men evinced a dwindling inclination to serve. The Pentagon insisted that the two developments were unrelated. Although the active military shrank by a third in overall size during the decade following the Gulf War, the services were increasingly hard-pressed to keep the ranks full by the end of the 1990s. Military leaders attributed the problem to a booming economy: The private sector offered a better deal. Their solution was to improve pay and benefits, to deploy additional platoons of recruiters, and to redouble their efforts to market their “product.” To burnish its drab image, the U.S. Army, the most straitened of the services, even adopted new headgear: a beret. With less fanfare, each service also began to relax its enlistment standards.

Bush’s expectation (and Powell’s hope) that the United States would rarely employ force failed to materialize. The outcome of the Persian Gulf War — and, more significantly, the outcome of the Cold War — created conditions more conducive to disorder than to order, and confronted both Bush and his successor with situations that each would view as intolerable. Because inaction would undermine U.S. claims to global leadership and threaten to revive isolationist habits, it was imperative that the United States remain engaged. As a result, the decade following victory in the Gulf became a period of unprecedented American military activism.

The motives for intervention varied as widely as the particular circumstances on the ground. In 1991, Bush sent U.S. troops into northern Iraq to protect Kurdish refugees fleeing from Saddam Hussein. Following his electoral defeat in 1992, he tasked the military with a major humanitarian effort in Somalia: to bring order to a failed state and aid to a people facing mass starvation. Not to be outdone, President Bill Clinton ordered the military occupation of Haiti, to remove a military junta from power and to “restore” democracy. Moved by the horrors of ethnic cleansing, Clinton bombed and occupied Bosnia. In Rwanda he intervened after the genocide there had largely run its course. Determined to prevent the North Atlantic Treaty Organization (NATO) from being discredited, he fought a substantial war for Kosovo and provided Slobodan Milosevic with a pretext for renewed ethnic cleansing, which NATO’s military action did little to arrest.

In lesser actions, Clinton employed cruise missiles to retaliate (ineffectually) against Saddam Hussein, for allegedly plotting to assassinate former President Bush, and against Osama bin Laden, for terrorist attacks on two U.S. embassies in Africa in 1998. As the impeachment crisis loomed at the end of 1998, the president renewed hostilities against Iraq; the brief December 1998 air offensive known as Operation Desert Fox gave way to a persistent but desultory bombing campaign that sputtered on to the very end of his presidency.

All those operations had one common feature: Each violated the terms of the so-called Powell Doctrine regarding the use of force. The “end state” sought by military action was seldom defined clearly and was often modified at midcourse. (In Somalia, the mission changed from feeding the starving to waging war against Somali warlords.) More often than not, intervention led not to a prompt and decisive outcome but to open-ended commitments. (President Clinton sent U.S. peacekeepers into Bosnia in 1995 promising to withdraw them in a year; more than five years later, when he left office, GIs were still garrisoning the Balkans.)

In contrast to Powell’s preference for using overwhelming force, the norm became to expend military power in discrete increments — to punish, to signal resolve, or to influence behavior. (Operation Allied Force, the American-led war for Kosovo in 1999, proceeded on the illusory assumption that a three- or four-day demonstration of airpower would persuade Slobodan Milosevic to submit to NATO’s will.) Nor were American soldiers able to steer clear of the moral complications that went hand in hand with these untidy conflicts. (The United States and NATO won in Kosovo by bringing the war home to the Serbian population — an uncomfortable reality from which some sought escape by proposing to waive the principle of noncombatant immunity.)

In short, the events that dashed President Bush’s dreams of a new world order also rendered the Powell Doctrine obsolete and demolished expectations that the Persian Gulf War might provide a template for the planning and execution of future U.S. military operations. By the fall of 2000, when a bomb-laden rubber boat rendered a billion-dollar U.S. Navy destroyer hors de combat and killed 17 Americans, the notion that the mere possession of superior military technology and know-how gave the United States the ultimate trump card rang hollow.

Judged in terms of the predictions and expectations voiced in its immediate aftermath, the Persian Gulf War does seem destined to end up as little more than a historical afterthought. But unburdening the war of those inflated expectations yields an altogether different perspective on the actual legacy of Desert Storm. Though it lacks the resplendence that in 1991 seemed the war’s proper birthright, the legacy promises to be both important and enduring.

To reach a fair evaluation of the war’s significance, Americans must, first of all, situate it properly in the grand narrative of U.S. military history. Desert Storm clearly does not rank with military enterprises such as the Civil War or World War II. Nor does the abbreviated campaign in the desert bear comparison with other 20th-century conflicts such as World War I, Korea, and Vietnam.

Rather, the most appropriate comparison is with that other “splendid little war,” the Spanish-American War of 1898. Norman Schwarzkopf’s triumph over the obsolete army of Saddam Hussein is on a par with Admiral George Dewey’s fabled triumph over an antiquated Spanish naval squadron at Manila Bay. Both qualify as genuine military victories. But the true measure of each is not the economy and dispatch with which U.S. forces vanquished their adversary but the entirely unforeseen, and largely problematic, consequences to which each victory gave rise.

In retrospect, the Spanish-American War — not just Dewey at Manila Bay, but Teddy Roosevelt leading the charge up San Juan Hill and General Nelson Miles “liberating” Puerto Rico — was a trivial military episode. And yet, the war marked a turning point in U.S. history. The brief conflict with Spain ended any compunction that Americans may have felt about the feasibility or propriety of imposing their own norms and values on others.

With that war, the nation enthusiastically shouldered its share of the “white man’s burden,” to preside thereafter over colonies and client states in the Caribbean and the Pacific. The war saddled the American military with new responsibilities to govern that empire, and with one large, nearly insoluble strategic problem: how to defend the Philippines, the largest of the Spanish possessions to which the United States had laid claim.

The Spanish-American War propelled the United States into the ranks of great powers. Notable events of the century that followed — including an ugly campaign to pacify the Philippines, a pattern of repetitive military intervention in the Caribbean, America’s tortured relationship with Cuba, and three bloody Asian wars fought in three decades — all derive, to a greater or lesser extent, from what occurred in 1898. And not one of those events was even remotely visible when President William McKinley set out to free Cubans from the yoke of Spanish oppression.

A similar case can be made with regard to the Persian Gulf War. However trivial the war was in a strictly military sense, it is giving birth to a legacy as significant and ambiguous as that of the Spanish-American War. And, for that reason, to consign the war to footnote status is to shoot wide of the mark.

The legacy of the Gulf War consists of at least four distinct elements. First, the war transformed Americans’ views about armed conflict: about the nature of war, the determinants of success, and the expectations of when and how U.S. forces should intervene.

Operation Desert Storm seemingly reversed one of the principal lessons of Vietnam — namely, that excessive reliance on technology in war is a recipe for disaster. In the showdown with Iraq, technology proved crucial to success. Technology meant American technology; other members of the coalition (with the partial exception of Great Britain) lagged far behind U.S. forces in technological capacity. Above all, technology meant American airpower; it was the effects of the bombing campaign preceding the brief ground offensive that provided the real “story” of the Gulf War. After coalition fighter and bomber forces had isolated, weakened, and demoralized Saddam Hussein’s army, the actual liberation of Kuwait seemed hardly more than an afterthought.

With Operation Desert Storm, a century or more of industrial age warfare came to an end and a new era of information age warfare beckoned — a style of warfare, it went without saying, to which the United States was uniquely attuned. In the information age, airpower promised to be to warfare what acupuncture was to medicine: a clean, economical, and nearly painless remedy for an array of complaints.

Gone, apparently, were the days of slugfests, stalemates, and bloodbaths. Gone, too, were the days when battlefield mishaps — a building erroneously bombed, an American soldier’s life lost to friendly fire — could be ascribed to war’s inherent fog and friction. Such occurrences now became inexplicable errors, which nonetheless required an explanation and an accounting. The nostrums of the information age equate information to power. They dictate that the greater availability of information should eliminate uncertainty and enhance the ability to anticipate and control events. Even if the key piece of information becomes apparent only after the fact, someone — commander or pilot or analyst — “should have known.”

Thus did the Persian Gulf War feed expectations of no-fault operations. The Pentagon itself encouraged such expectations by engaging in its own flights of fancy. Doctrine developed by the Joint Chiefs of Staff in the 1990s publicly committed U.S. forces to harnessing technology to achieve what it called “full spectrum dominance”: the capability to prevail, quickly and cheaply, in any and all forms of conflict.

This technological utopianism has, in turn, had two perverse effects. The first has been to persuade political elites that war can be — and ought to be — virtually bloodless. As with an idea so stupid only an intellectual can believe it, the imperative of bloodless war will strike some as so bizarre that only a bona fide Washington insider (or technogeek soldier) could take it seriously. But as the war for Kosovo demonstrated in 1999, such considerations now have a decisive effect on the shape of U.S. military operations. How else to explain a war, allegedly fought for humanitarian purposes, in which the commander in chief publicly renounced the use of ground troops and restricted combat aircraft to altitudes at which their efforts to protect the victims of persecution were necessarily ineffective?

Technological utopianism has also altered fundamentally the moral debate about war and the use of force. During the decades following Hiroshima, that debate centered on assessing the moral implications of nuclear war and nuclear deterrence — an agenda that put moral reasoning at the service of averting Armageddon. Since the Persian Gulf War, theologians and ethicists, once openly skeptical of using force in all but the direst circumstances, have evolved a far more expansive and accommodating view: They now find that the United States has a positive obligation to intervene in places remote from any tangible American interests (the Balkans and sub-Saharan Africa, for example). More than a few doves have developed markedly hawkish tendencies.

The second element of the Gulf War’s legacy is a new consensus on the relationship between military power and America’s national identity. In the aftermath of Desert Storm, military preeminence has become, as never before, an integral part of that identity. The idea that the United States presides as the world’s only superpower — an idea that the Persian Gulf War more than any other single event made manifest — has found such favor with the great majority of Americans that most can no longer conceive of an alternative.

That U.S. military spending now exceeds the combined military spending of all the other leading powers, whether long-standing friends or potential foes, is a fact so often noted that it has lost all power to astonish. It has become noncontroversial, an expression of the way things are meant to be, and, by common consent, of the way they ought to remain. Yet in the presidential campaign of 2000, both the Democratic and the Republican candidates agreed that the current level of defense spending — approaching $300 billion per year — is entirely inadequate. Tellingly, it was the nominee of the Democratic Party, the supposed seat of antimilitary sentiment, who offered the more generous plan for boosting the Pentagon’s budget. The campaign included no credible voices suggesting that the United States might already be spending too much on defense.

The new consensus on the military role of the United States — a consensus forged at a time when the actual threats to the nation’s well-being are fewer than in any period since the 1920s — turns traditional American thinking about military power on its head. Although the Republic came into existence through a campaign of violence, the Founders did not view the experiment upon which they had embarked as an exercise in accruing military might. If anything, the reverse was true. By insulating America (politically but not commercially) from the Old World’s preoccupations with wars and militarism, they hoped to create in the New World something quite different.

Even during the Cold War, the notion lingered that, when it came to military matters, America was indeed intended to be different. The U.S. government classified the Cold War as an “emergency,” as if to imply that the level of mobilization it entailed was only a temporary expedient. Even so, cold warriors with impeccable credentials — Dwight D. Eisenhower prominent among them — could be heard cautioning their fellow citizens to be wary of inadvertent militarism. The fall of the Berlin Wall might have offered an opportunity to reflect on Eisenhower’s Farewell Address. But victory in the Gulf, which seemed to demonstrate that military power was ineffably good, nipped any such inclination in the bud. When it came to Desert Storm, what was not to like?

Indeed, in some quarters, America’s easy win over Saddam Hussein inspired the belief that the armed forces could do much more henceforth than simply “fight and win the nation’s wars.” To demonstrate its continuing relevance in the absence of any plausible adversary, the Pentagon in the 1990s embraced an activist agenda and implemented a new “strategy of engagement” whereby U.S. forces devote their energies to “shaping the international environment.” The idea, according to Secretary of Defense William Cohen, is “to shape people’s opinions about us in ways that are favorable to us. To shape events that will affect our livelihood and our security. And we can do that when people see us, they see our power, they see our professionalism, they see our patriotism, and they say that’s a country that we want to be with.”

American paratroopers jumping in Kazakhstan, U.S. Special Forces training peacekeepers in Nigeria and counter-narcotic battalions in Colombia, and U.S. warships stopping for fuel at the port of Aden are all part of an elaborate and ambitious effort to persuade others to “be with” the world’s preeminent power. Conceived in the Pentagon and directed by senior U.S. military commanders, that effort proceeds quite openly, the particulars duly reported in the press. Few Americans pay it much attention. Their lack of interest suggests that the general public has at least tacitly endorsed the Pentagon’s strategy, and is one measure of how comfortable Americans have become, a decade after the Persian Gulf War, with wielding U.S. military power.

The third element of the Gulf War’s legacy falls into the largely misunderstood and almost completely neglected province of civil-military relations. To the bulk of the officer corps, Desert Storm served to validate the Powell Doctrine. It affirmed the military nostalgia that had taken root in the aftermath of Vietnam — the yearning to restore the concept of self-contained, decisive conventional war, conducted by autonomous, self-governing military elites. And yet, paradoxically, the result of Desert Storm has been to seal the demise of that concept. In the aftermath of the Persian Gulf War, the boundaries between war and peace, soldiers and civilians, combatants and noncombatants, and the military and political spheres have become more difficult than ever to discern. In some instances, those boundaries have all but disappeared.

Operation Allied Force in the Balkans in 1999 was the fullest expression to date of that blurring phenomenon. During the entire 11-week campaign, the Clinton administration never budged from its insistence that the military action in progress did not really constitute a war. As the bombing of Serbia intensified, it became unmistakably clear that the United States and its NATO partners had given greater priority to protecting the lives of their own professional soldiers than to aiding the victims of ethnic cleansing or to avoiding noncombatant casualties. When NATO ultimately prevailed, it did so by making war not on the Yugoslavian army but on the Serbian people.

The consequences of this erosion of civil-military distinctions extend well beyond the operational sphere. One effect has been to undermine the military profession’s traditional insistence on having wide latitude to frame the policies that govern the armed forces. At the same time, in areas quite unrelated to the planning and conduct of combat operations, policymakers have conferred ever greater authority on soldiers. Thus, although the Persian Gulf War elevated military credibility to its highest point in memory, when it comes to policy matters even remotely touching on gender, senior officers have no choice but to embrace the politically correct position — which is that in war, as in all other human endeavors, gender is irrelevant. To express a contrary conviction is to imperil one’s career, something few generals and admirals are disposed to do.

Yet even as civilians dismiss the military’s accumulated wisdom on matters relating to combat and unit cohesion, they thrust upon soldiers a wider responsibility for the formulation of foreign policy. The four-star officers presiding over commands in Europe, the Middle East, Latin America, and the Pacific have displaced the State Department as the ultimate arbiters of policy in those regions.

The ill-fated visit of the USS Cole to Aden last October, for example, came not at the behest of some diplomatic functionary but on the order of General Anthony Zinni, the highly regarded Marine then serving as commander in chief (CINC) of U.S. Central Command, responsible for the Persian Gulf. Had Zinni expressed reservations about having a mixed-gender warship in his area of operations, he would, of course, have been denounced for commenting on matters beyond his purview. But no one would presume to say that Zinni was venturing into areas beyond his professional competence by dispatching the Cole in pursuit of (in his words) “more engagement” — part of a larger, misguided effort to befriend the Yemeni government.

Before his retirement, Zinni openly, and aptly, referred to the regional CINCs as “proconsuls.” It’s a boundary-blurring term: Proconsuls fill an imperial mandate, though Americans assure themselves that they neither possess nor wish to acquire an empire. Zinni is honest enough to acknowledge that, in the post-Cold War world, the CINC’s function is quasi-imperial — like the role of General Douglas MacArthur presiding over occupied Japan. The CINC/proconsul projects American power, maintains order, enforces norms of behavior, and guards American interests. He has plainly become something more than a mere soldier. He straddles the worlds of politics, diplomacy, and military affairs, and moves easily among them. In so doing, he has freed himself from the strictures that once defined the limits of soldierly prerogatives.

Thus, when he stepped down as CINC near the end of the 2000 presidential campaign, Zinni felt no compunction about immediately entering the partisan fray. He announced that the policies of the administration he had served had all along been defective. With a clutch of other recently retired senior officers, he threw his support behind George W. Bush, an action intended to convey the impression that Bush was the military’s preferred candidate.

Some critics have warned that no good can come of soldiers’ engaging in partisan politics. Nonsense, is the response: When General Zinni endorses Bush, and when General Schwarzkopf stumps the state of Florida and denounces Democrats for allegedly disallowing military absentee ballots, they are merely exercising their constitutionally protected rights as citizens. The erosion of civil-military boundaries since the Persian Gulf War has emboldened officers to engage in such activities, and the change reflects an increasingly overt politicization of the officer corps.

According to a time-honored tradition, to be an American military professional was to be apolitical. If, in the past, the occasional general tossed his hat into the ring — as Dwight D. Eisenhower did in 1952 — his party affiliation came as a surprise, and almost an afterthought. In the 1990s, with agenda-driven civilians intruding into military affairs and soldiers assuming the mantle of imperial proconsuls, the earlier tradition went by the board. And that, too, is part of the Gulf War’s legacy.

But perhaps the most important aspect of the legacy is the war’s powerful influence on how Americans now view both the immediate past and the immediate future. When it occurred near the tail end of the 20th century, just as the Cold War’s final chapter was unfolding, the victory in the desert seemed to confirm that the years since the United States bounded onto the world stage in 1898 had been the “American Century” after all. Operation Desert Storm was interpreted as an indisputable demonstration of American superiority and made it plausible to believe once again that the rise of the United States to global dominance and the triumph of American values were the central themes of the century then at its close.

In the collective public consciousness, the Persian Gulf War and the favorable conclusion of the Cold War were evidence that, despite two world wars, multiple episodes of genocide, and the mind-boggling criminality of totalitarianism, the 20th century had turned out basically all right. The war let Americans see contemporary history not as a chronicle of hubris, miscalculation, and tragedy, but as a march of progress, its arc ever upward. And that perspective — however much at odds with the postmodernism that pervades fashionable intellectual circles — fuels the grand expectations that Americans have carried into the new millennium.

Bill Clinton has declared the United States “the indispensable nation.” According to Madeleine Albright, America has become the “organizing principle” of the global order. “If we have to use force,” said Albright, “it is because we are America; we are the indispensable nation. We stand tall. We see further than other countries into the future.” Such sentiments invite derision in sophisticated precincts. But they play well in Peoria, and accord precisely with what most Americans want to believe.

In 1898, a brief, one-sided war with Spain persuaded Americans, who knew their intentions were benign, that it was their destiny to shoulder a unique responsibility and uplift “little brown brother.” Large complications ensued. In 1991, a brief, one-sided war with Iraq persuaded Americans, who thought they had deciphered the secrets of history, that the rising tide of globalization will bring the final triumph of American values. A decade after the fact, events in the Persian Gulf and its environs — the resurgence of Iraqi power under Saddam Hussein and the never-ending conflict between Israelis and Arabs — suggest that large complications will ensue once again.

As Operation Desert Storm recedes into the distance, its splendor fades. But its true significance comes into view.

—

Andrew J. Bacevich is a professor of international relations and history at Boston University. He is the author of several books, including most recently Breach of Trust: How Americans Failed Their Soldiers and Their Country (2013). Photo courtesy of Wikimedia Commons